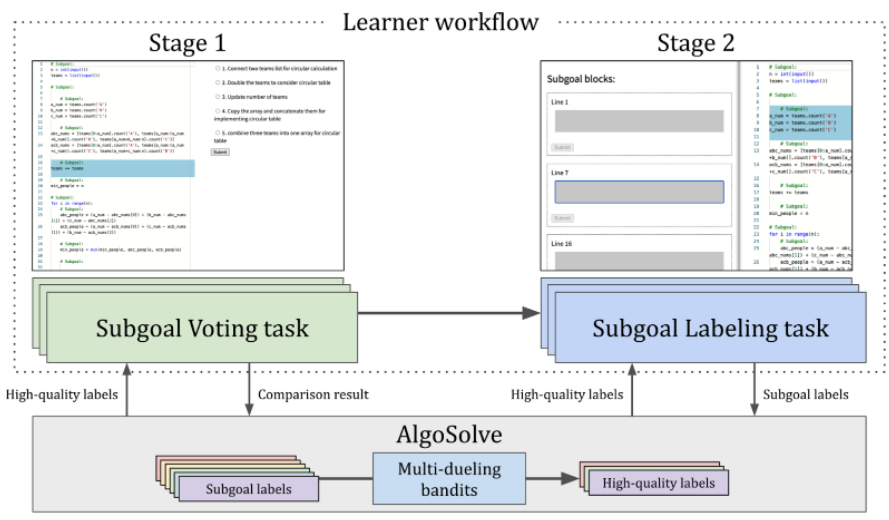

AlgoSolve is a two-stage learnersourcing system that guides learners to improve the quality of subgoal labels. The workflow consists of two types of microtasks: 1) Subgoal Voting tasks, where learners read several subgoal label examples, compare their quality, and vote for the best one through system-generated multiple-choice questions; and 2) Subgoal Labeling tasks, where learners create their own subgoal labels and iterate on them by comparing them against peer examples. Using the comparison results and the newly created subgoal labels, AlgoSolve identifies high-quality subgoal labels with a multi-dueling bandit algorithm, and these labels in turn support the subgoal learning of future learners.

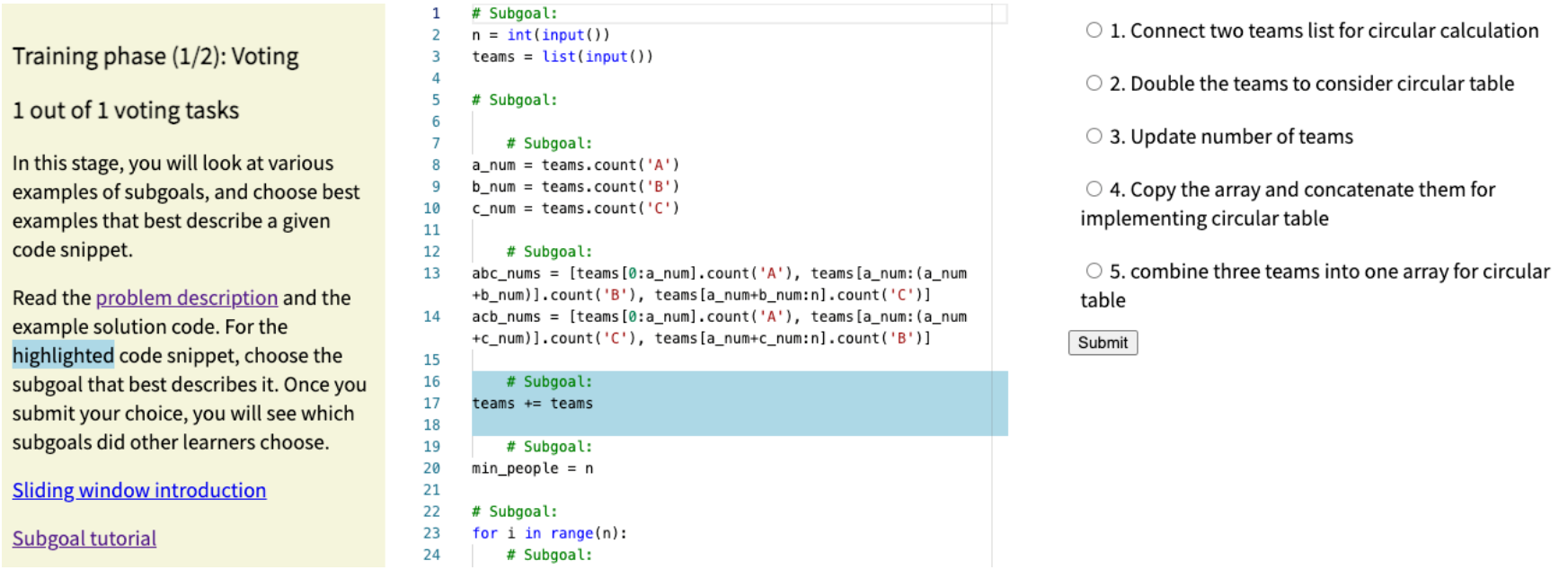

The Subgoal Voting task is designed as a multiple-choice question that asks learners to select the subgoal label that best describes a given subgoal and its corresponding code segment. Learners are shown up to five examples. The system uses learners' selections to determine the quality differences between subgoal labels and to decide which labels to show future learners as examples. By presenting subgoal label candidates that explain the given subgoal, we expect learners to quickly form a good understanding of the solution presented in the worked example. To help learners learn what makes a subgoal label high quality, the system shows both system-selected, high-quality subgoal labels and randomly selected labels that are likely to be of lower quality. Comparing the two kinds of labels helps learners notice what distinguishes high-quality subgoal labels.
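To make the voting step concrete, here is a minimal sketch of how such a question could be assembled and how one vote could be turned into comparison data. It assumes the system-selected labels are passed in from elsewhere (for example, from the bandit described below), and the function names `build_voting_question` and `vote_to_comparisons` are hypothetical, not AlgoSolve's actual code. Treating the chosen option as beating every other shown option is also an assumption of this sketch.

```python
import random


def build_voting_question(system_selected, pool, size=5, rng=None):
    """Combine system-selected labels with randomly drawn labels (hypothetical helper).

    `system_selected` stands in for the labels the system picks as high quality;
    the remaining slots, up to `size` options in total, are filled at random
    from `pool`.
    """
    rng = rng or random.Random()
    chosen = list(system_selected)[:size]
    candidates = [label for label in pool if label not in chosen]
    n_random = min(size - len(chosen), len(candidates))
    options = chosen + rng.sample(candidates, n_random)
    rng.shuffle(options)  # hide which options were system-selected
    return options


def vote_to_comparisons(options, chosen_index):
    """Expand one multiple-choice vote into (winner, loser) pairs."""
    winner = options[chosen_index]
    return [(winner, other) for i, other in enumerate(options) if i != chosen_index]


# Usage: two system-selected labels plus three random ones from the pool.
pool = ["Initialize the DP table", "Loop", "Fill each cell from smaller subproblems",
        "Return the answer", "Code", "Compute result"]
options = build_voting_question(pool[:2], pool, rng=random.Random(0))
pairs = vote_to_comparisons(options, 0)
```

Expanding a single vote into pairwise outcomes is what lets a dueling-bandit method consume the result, since such methods learn from relative preferences rather than absolute ratings.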

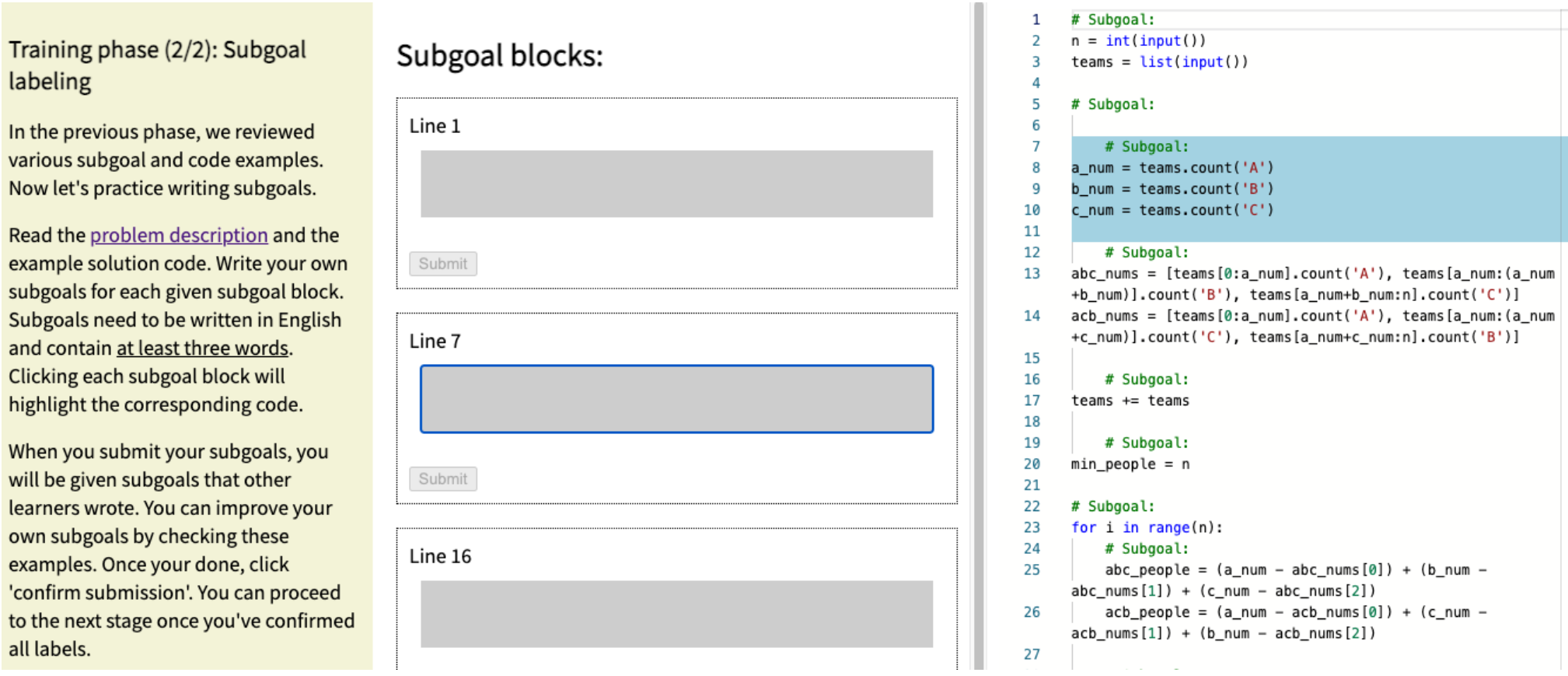

In the Subgoal Labeling task, learners write their own subgoal labels. They first submit their initial work (i.e., initial subgoal labels) and then resubmit their final descriptions (i.e., final subgoal labels) after viewing feedback from the system. As feedback, the system provides three system-selected subgoal labels, which learners can compare with their initial labels to revise errors or misconceptions and iterate. The system collects the final labels and provides them to future learners.
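The two-step flow of this task can be sketched as a small session object. This is an illustrative sketch only: `LabelingSession` and its methods are hypothetical, and ranking peer labels by a per-label `scores` dictionary is an assumed stand-in for however the system actually selects its three feedback labels.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LabelingSession:
    """Hypothetical two-step Subgoal Labeling task for one subgoal."""
    pool: list                    # labels collected from earlier learners
    scores: dict                  # assumed quality estimate per label
    initial: Optional[str] = None
    final: Optional[str] = None

    def submit_initial(self, label: str) -> list:
        """Record the initial label and return three peer labels as feedback."""
        self.initial = label
        ranked = sorted(self.pool, key=lambda l: self.scores.get(l, 0.0), reverse=True)
        return ranked[:3]

    def submit_final(self, label: str) -> None:
        """Record the revised label and add it to the pool for future learners."""
        if self.initial is None:
            raise RuntimeError("submit an initial label first")
        self.final = label
        self.pool.append(label)
```

Keeping the initial and final labels separate mirrors the task design: the feedback is shown only after a learner has committed to a first attempt, so the comparison can expose errors in that attempt.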

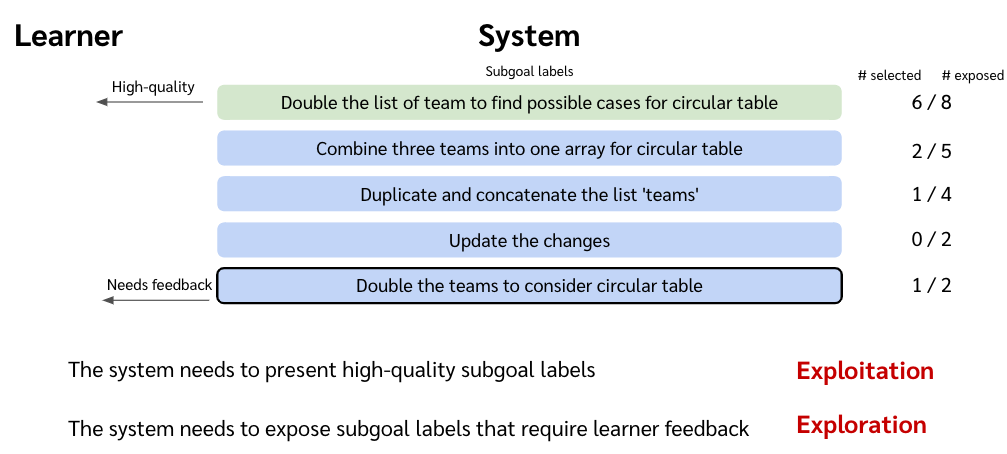

AlgoSolve identifies high-quality subgoal labels among learners' submissions (Task 2) using the comparison results between subgoal labels (Task 1). This requires balancing exploitation and exploration: the system needs not only to present high-quality subgoal labels for meaningful subgoal learning but also to gather feedback on the collected labels to find out which ones are high quality. We formulate this problem as a multi-dueling bandit problem, a variant of the multi-armed bandit problem in which the algorithm selects multiple arms at a time and learns from the comparison results among them. In particular, we used IndSelfSparring, a multi-dueling bandit algorithm based on Thompson sampling. To encourage the selection of newly added subgoal labels, we gave them an optimistic prior of Beta(4, 1), whose mean of 4/(4 + 1) = 0.8 treats a new label as if it had been preferred in four out of five comparisons.
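The selection loop can be illustrated with a minimal Thompson-sampling sketch that keeps an independent Beta posterior per label, in the spirit of IndSelfSparring. It is not AlgoSolve's implementation: the class name `MultiDuelingSampler` is hypothetical, drawing labels without replacement (so each question shows distinct labels) is an assumption of this sketch, and each (winner, loser) pair simply adds one win to the winner and one loss to the loser.

```python
import random

PRIOR = (4.0, 1.0)  # Beta(4, 1) prior for newly added labels, as described above


class MultiDuelingSampler:
    """Thompson-sampling multi-dueling bandit over subgoal labels (sketch)."""

    def __init__(self, rng=None):
        self.rng = rng or random.Random()
        self.params = {}  # label -> [alpha, beta] of its Beta posterior

    def add_label(self, label):
        # Optimistic prior so that new labels get shown and compared early.
        self.params.setdefault(label, list(PRIOR))

    def select(self, m):
        """Pick up to m distinct labels, one Thompson draw per slot."""
        chosen = []
        for _ in range(min(m, len(self.params))):
            samples = {
                label: self.rng.betavariate(a, b)
                for label, (a, b) in self.params.items()
                if label not in chosen
            }
            chosen.append(max(samples, key=samples.get))
        return chosen

    def update(self, comparisons):
        """Each (winner, loser) pair adds a win to one label and a loss to the other."""
        for winner, loser in comparisons:
            self.params[winner][0] += 1
            self.params[loser][1] += 1

    def posterior_mean(self, label):
        a, b = self.params[label]
        return a / (a + b)


# Usage: add labels, pick three to show, then record that the first one won.
sampler = MultiDuelingSampler(rng=random.Random(1))
for name in ["A", "B", "C", "D", "E"]:
    sampler.add_label(name)
shown = sampler.select(3)
sampler.update([(shown[0], other) for other in shown[1:]])
```

Because every label is re-sampled from its posterior on each draw, labels with few comparisons still win draws fairly often (exploration), while labels that keep winning votes accumulate higher posterior means and are shown more (exploitation).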

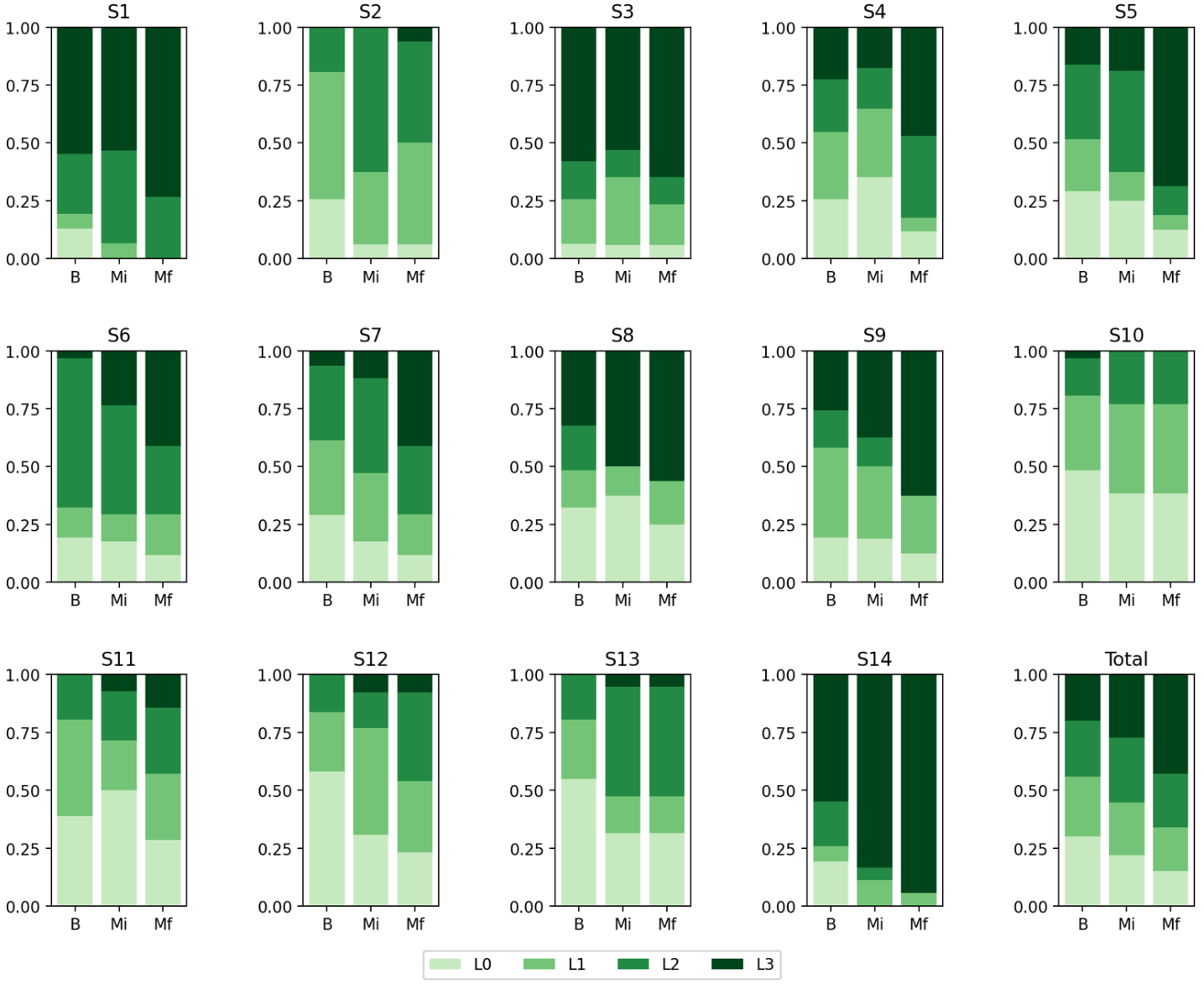

We evaluated AlgoSolve in a between-subjects study with 68 participants who were novices in algorithmic problem-solving. The baseline condition replicated the best-performing method in subgoal learning, in which learners create labels on their own and receive expert-created subgoal labels as feedback. In the AlgoSolve condition, learners used AlgoSolve to create subgoal labels. We measured and compared the quality of the subgoal labels that learners created in the two conditions. Results show that learners in the AlgoSolve condition created higher-quality subgoal labels. The graph above shows the overall quality distribution of subgoal labels in each condition, where B refers to the baseline condition and Mf refers to the AlgoSolve condition. L0 denotes low-quality subgoal labels and L3 denotes high-quality subgoal labels.

@inproceedings{choi2022algosolve,
  title={AlgoSolve: Supporting Subgoal Learning in Algorithmic Problem-Solving with Learnersourced Microtasks},
  author={Choi, Kabdo and Shin, Hyungyu and Xia, Meng and Kim, Juho},
  booktitle={CHI Conference on Human Factors in Computing Systems},
  pages={1--16},
  year={2022}
}